Job Configurations

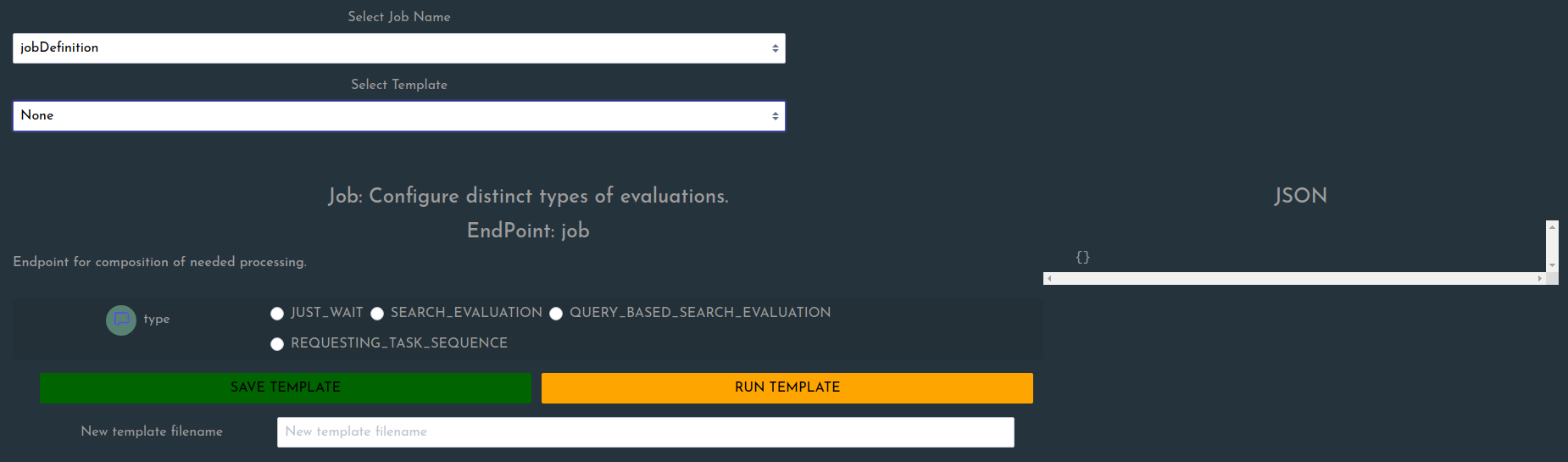

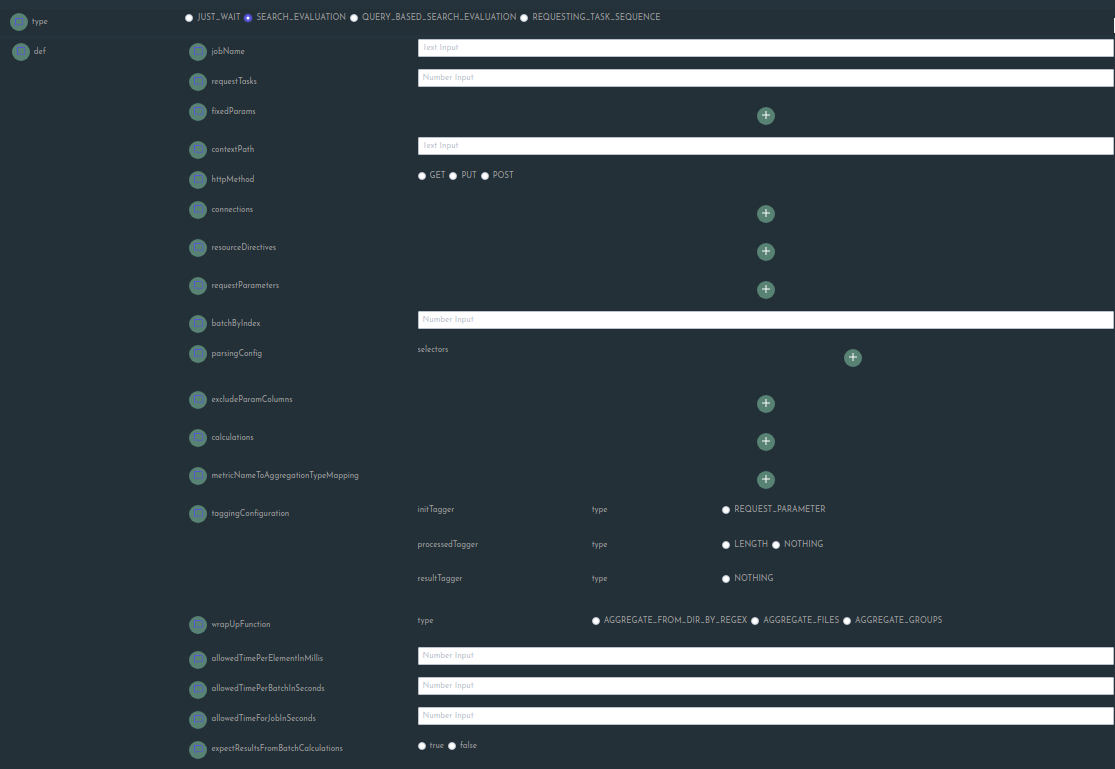

Currently, there are four types of job definitions:

| Job Definition Types | |
|---|---|
| JUST_WAIT | Dummy job. Use this only if you want to test the claiming of single batches by the nodes. The batches here only implement a wait interval. |
| SEARCH_EVALUATION | Legacy search evaluation definition with all configuration options. Main definition format until v0.1.5, thus kept for compatibility reasons. The recommended and most flexible way is REQUESTING_TASK_SEQUENCE. |
| QUERY_BASED_SEARCH_EVALUATION | Legacy search evaluation definition with reduced configuration options and some predefined settings. Main shortened definition format until v0.1.5, thus kept for compatibility reasons. The recommended and most flexible way is REQUESTING_TASK_SEQUENCE. |
| REQUESTING_TASK_SEQUENCE | Build your processing pipeline by defining a sequence of tasks. Provides the most flexibility. Recommended way of defining jobs. |

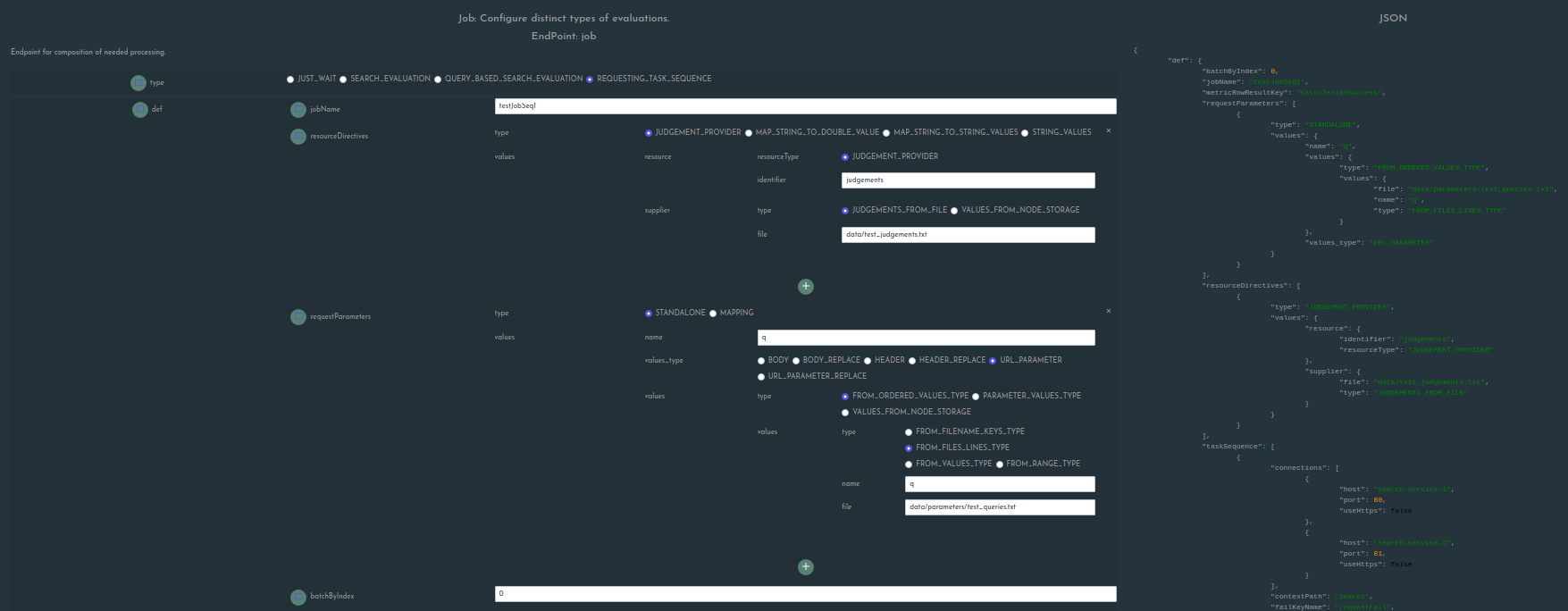

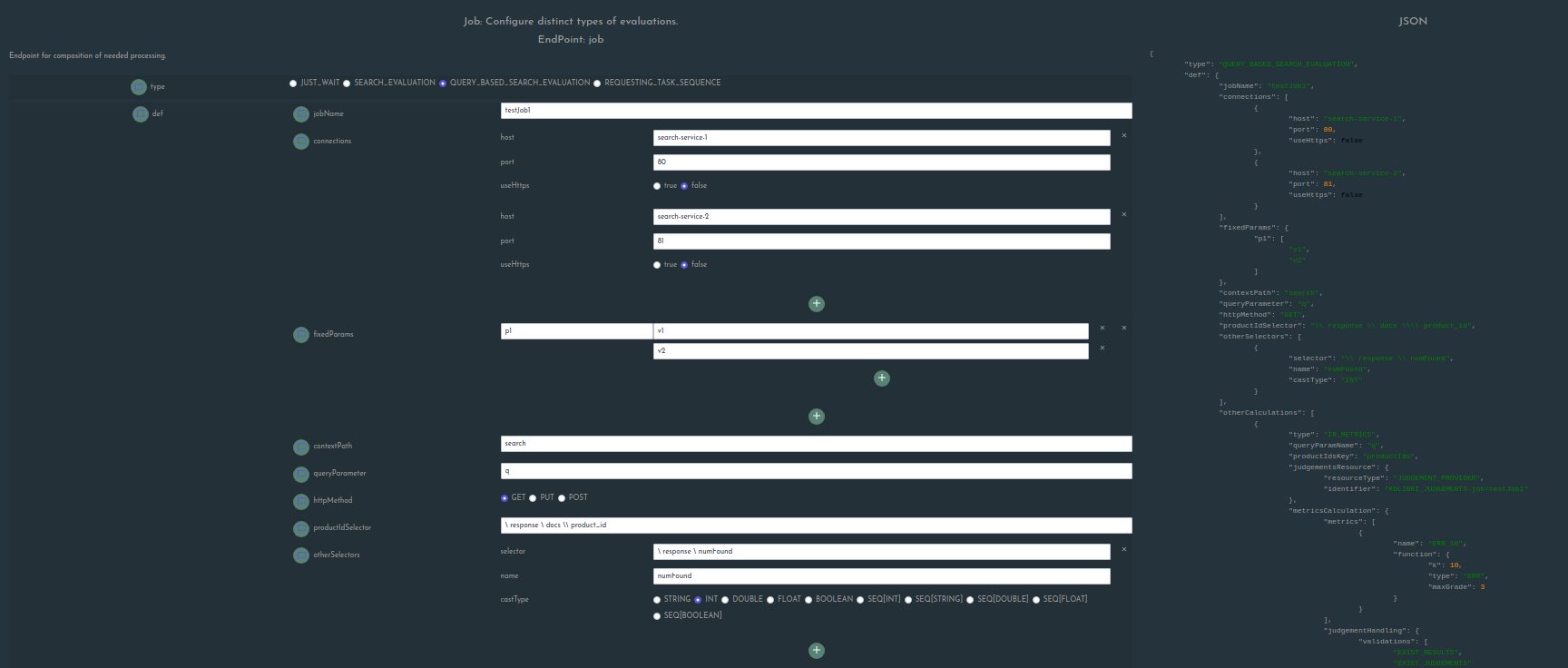

Actual UI selection screen:

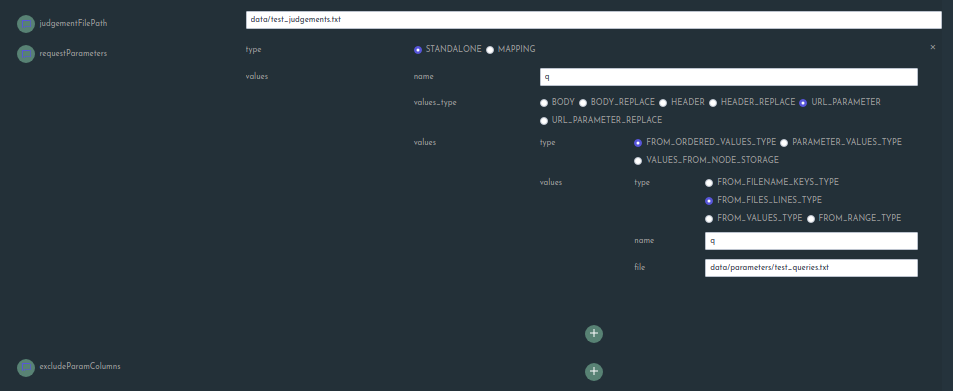

Note that the attribute excludeParamColumns must be filled in, even if only with an empty list. To keep the list empty, add an entry in the UI and delete it again, so that an empty array is generated in the JSON representation on the right. This is a temporary workaround, needed because the current config treats this field as mandatory.
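The difference between an omitted field and an explicitly empty list can be illustrated with a short Python sketch. Only the field name excludeParamColumns comes from the text above; the job name value and the helper `has_explicit_field` are hypothetical and not part of Kolibri.

```python
import json


def has_explicit_field(definition_json: str, field: str) -> bool:
    """Return True if the field is present in the JSON object, even if its value is empty."""
    return field in json.loads(definition_json)


# Hypothetical fragments of a job definition, as they might appear in the JSON view.
without_field = json.dumps({"jobName": "testJob"})
with_empty_list = json.dumps({"jobName": "testJob", "excludeParamColumns": []})

print(has_explicit_field(without_field, "excludeParamColumns"))    # False
print(has_explicit_field(with_empty_list, "excludeParamColumns"))  # True
```

The second fragment is the shape the workaround is meant to produce: the field exists, and its value is an empty array.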

Let’s walk through the job definitions that define actual computation.

In the following, attributes that are purely legacy fields without any effect are marked with (Not used); in some cases you will still need to set them. Fields that have a separate, more detailed section in this documentation are marked with (*).

SEARCH_EVALUATION

This definition exists to provide backward compatibility with job definitions that were composed up to kolibri v0.1.5, when the task-sequence configuration had not yet been provided. For new configurations we recommend using the REQUESTING_TASK_SEQUENCE type, as it provides the most flexibility. Definitions submitted for the SEARCH_EVALUATION type are converted internally to a task sequence.

The individual fields are as follows:

| SEARCH_EVALUATION fields | |

|---|---|

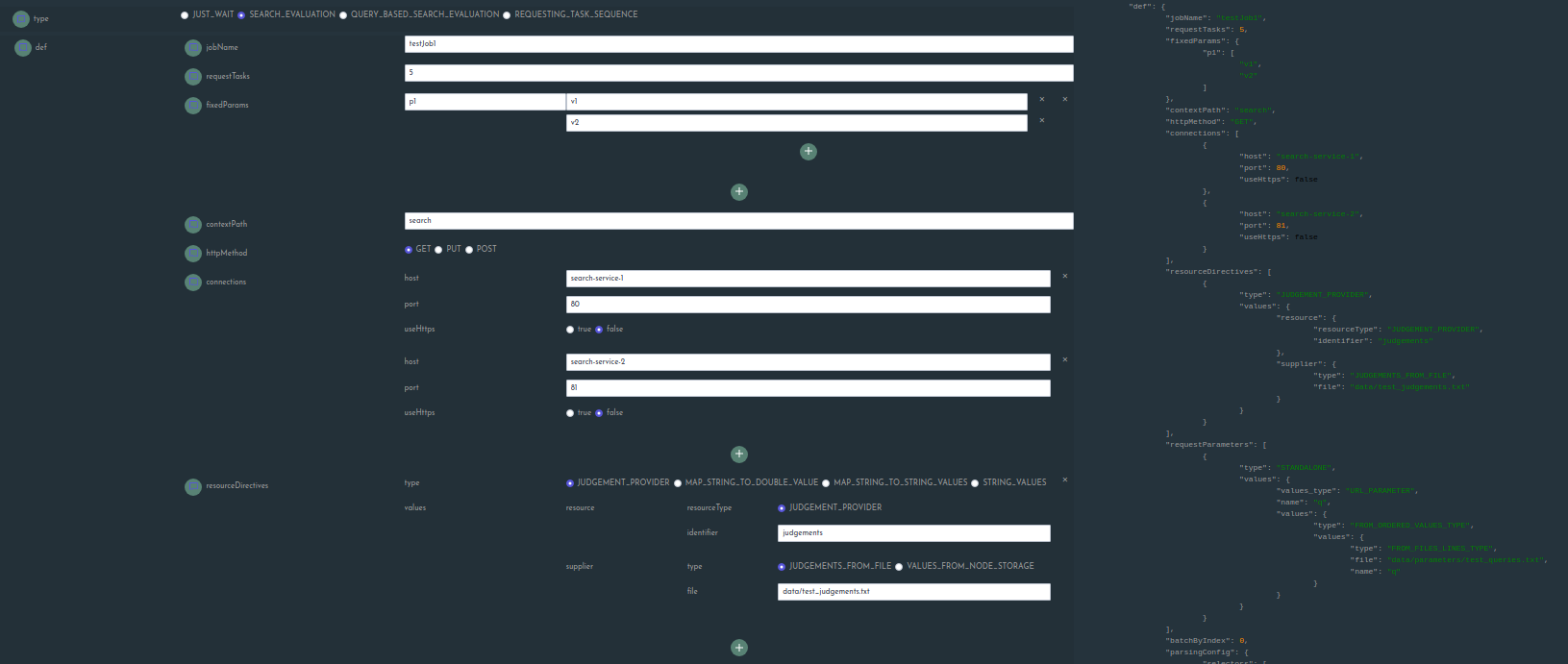

| jobName | Simply the name of the job. Prohibited character: underscore. |

| requestTasks | (Not used): Integer, defining how many batches are processed in parallel. |

| fixedParams | Definition of parameter settings that are applied on every request. You can assign multiple values to a single parameter name; all of them will be set in the request. |

| contextPath | The url context path used for requests. |

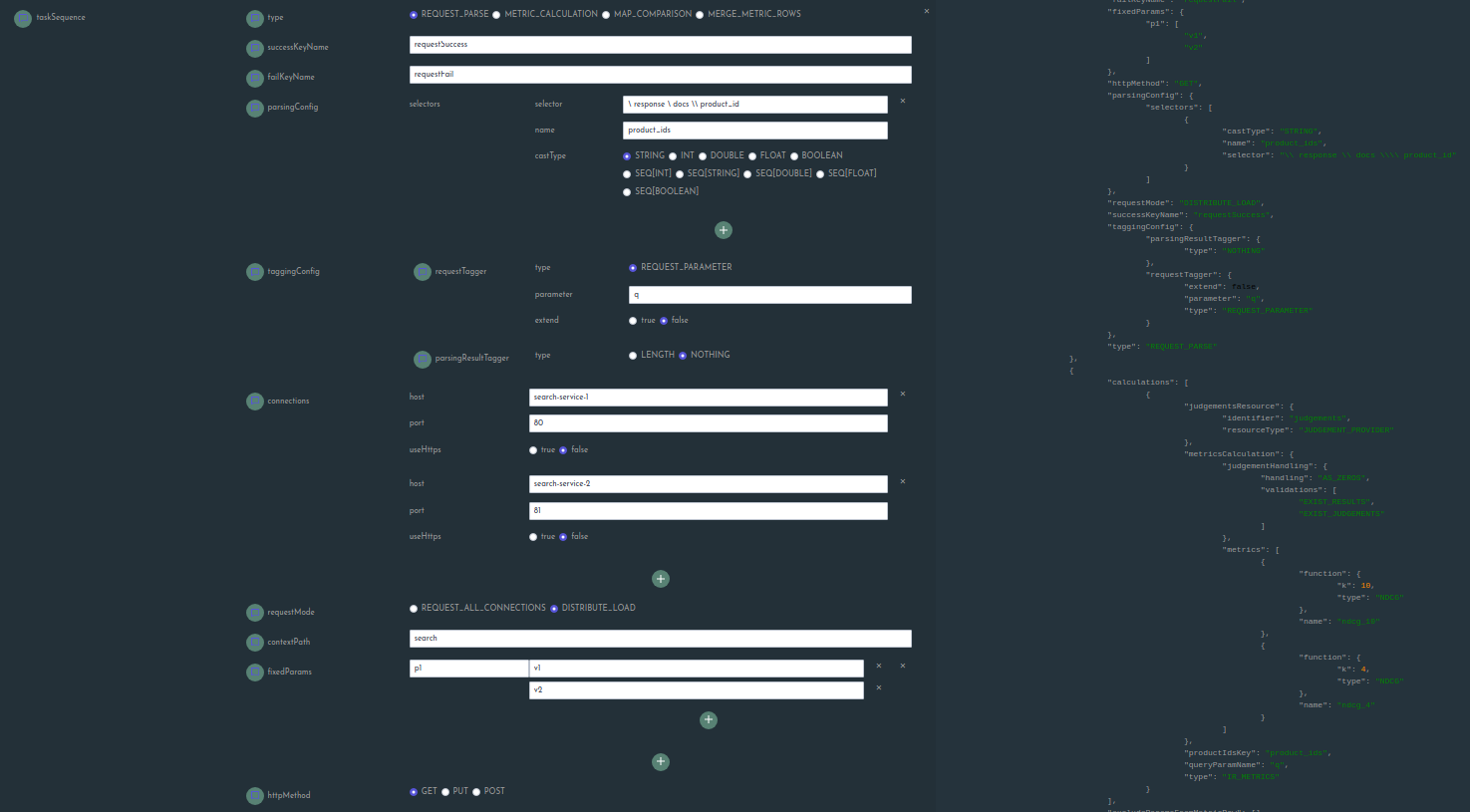

| httpMethod | The http method to use, choose between GET, PUT, POST. |

| connections | Define one or more target urls by defining the host (without protocol prefix, e.g. not ‘http://search-service’ but ‘search-service’), the port, and whether to use http or https. If multiple connections are defined, load will be distributed among them. |

| (*) resourceDirectives | Resource directives are configurations of resources that shall be loaded centrally on each node, and are only removed once the node no longer has any batch running that relates to a job for which the resources were defined. Larger resources should be loaded this way, such as judgement lists or extensive parameter mappings. The options currently available are: JUDGEMENT_PROVIDER, MAP_STRING_TO_DOUBLE_VALUE, MAP_STRING_TO_STRING_VALUES, STRING_VALUES |

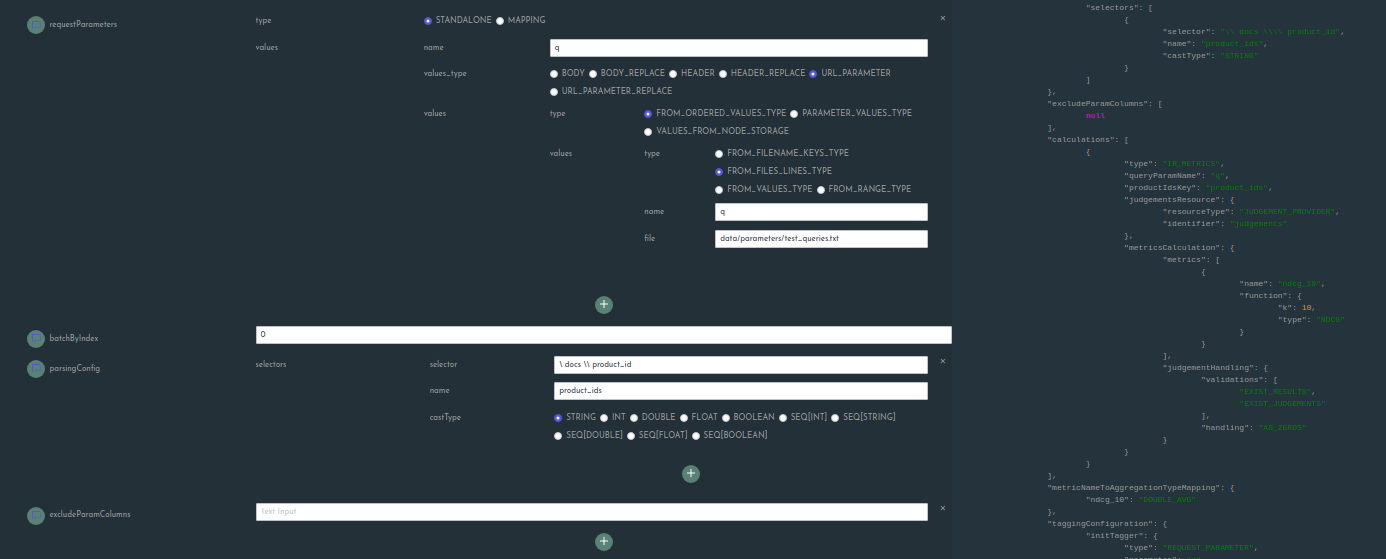

| (*) requestParameters | Defines the sequence of parameters (STANDALONE or MAPPING) that are permutated to generate the whole range of requests to evaluate. While parameters of type STANDALONE are just iterated over, MAPPINGS provide the option to limit the permutation space by defining a parameter generating key values and one or more mappings that are either mapped to the key value or any other mapped parameter configured before. More details in the separate documentation section. |

| batchByIndex | Specifies the 0-based index of the above requestParameters list to define the parameter by which to batch the job (e.g the query-parameter is a natural parameter to batch by usually). |

| (*) parsingConfig | The parsing configuration specifies which fields to parse from each response (response is assumed to be of json format). |

| excludeParamColumns | Sometimes it makes sense to exclude certain parameters from the parameters that are used to group results for each row in the calculation result. One use case is to exclude the query parameter if the batching is done by each query to avoid redundant entries. Another case is if we make use of the *_REPLACE parameter types, which are used to replace substrings in other parameters. |

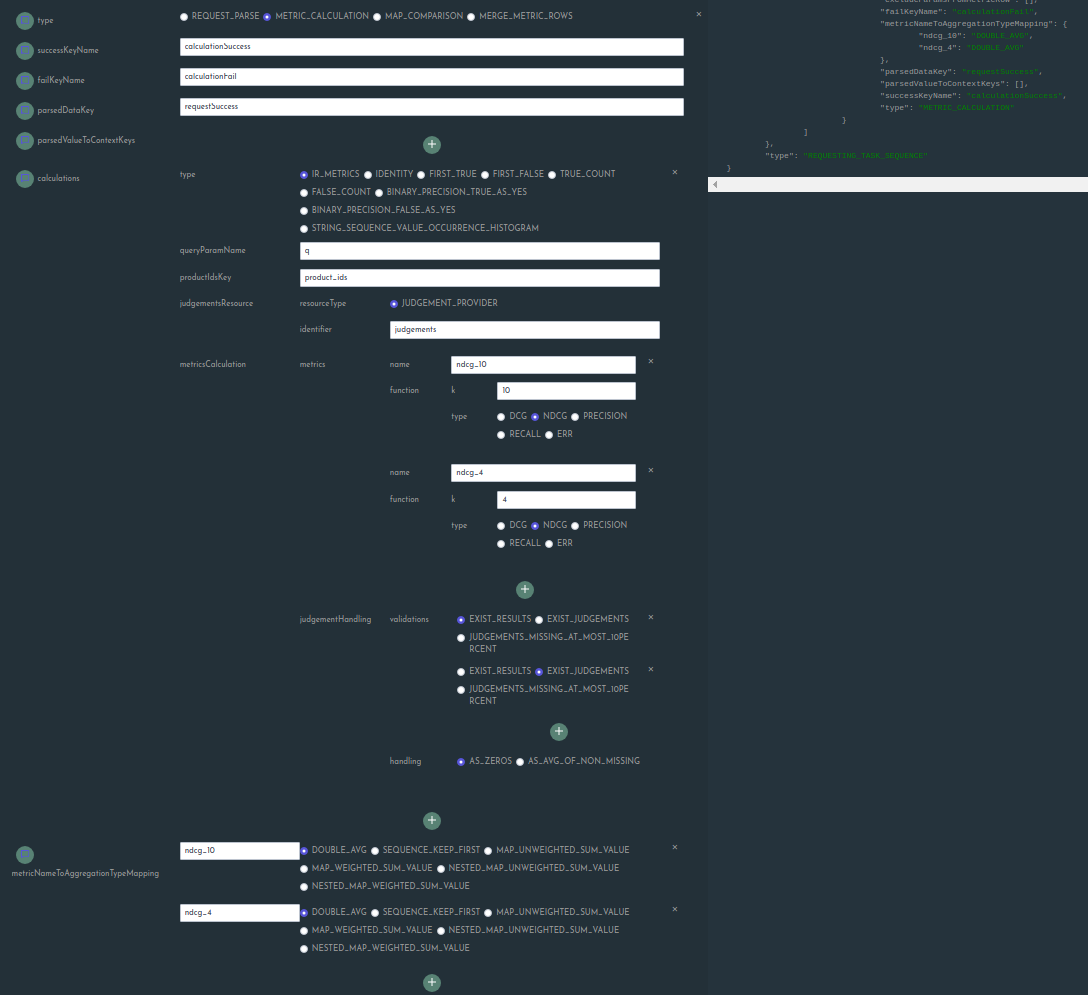

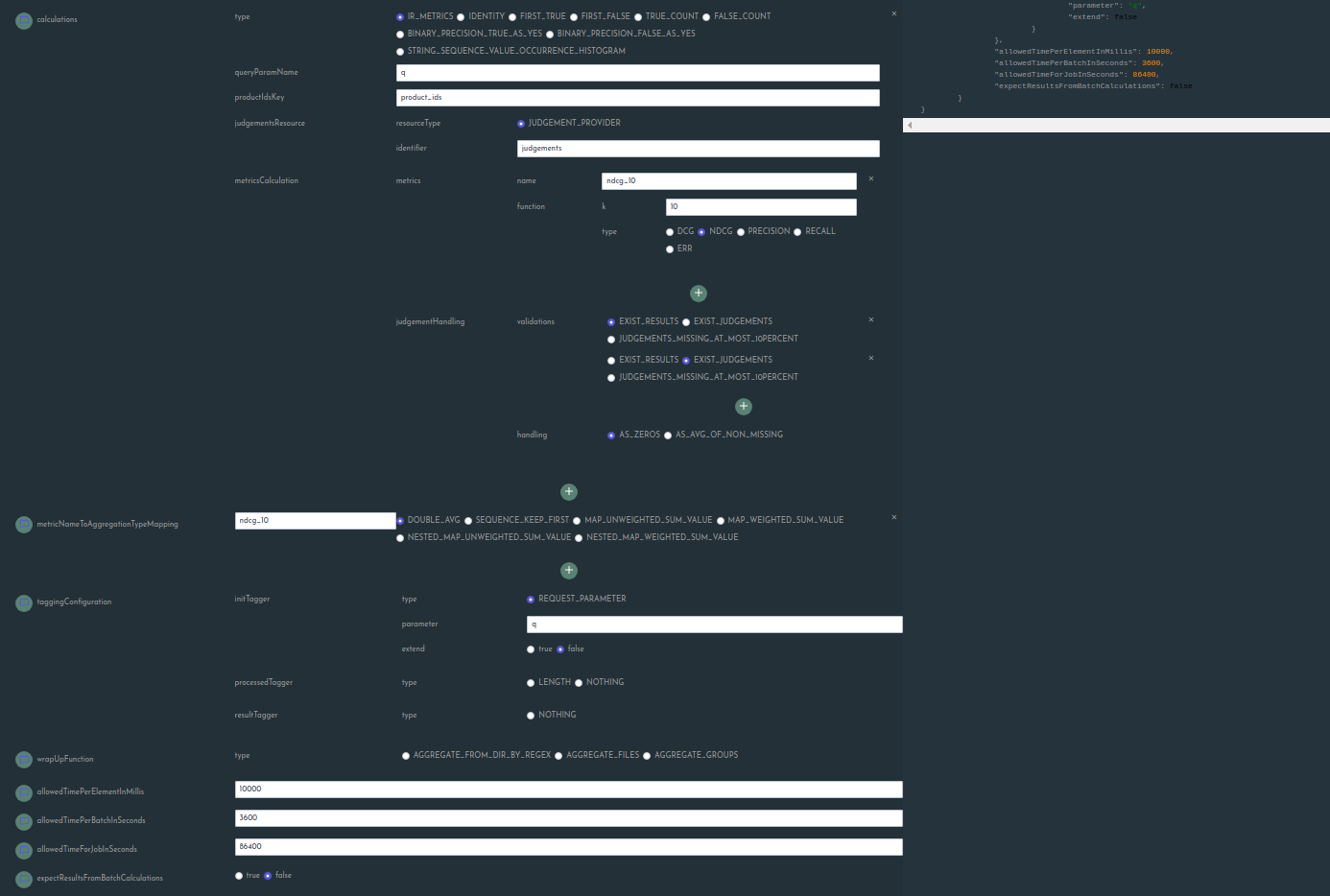

| (*) calculations | Sequence of different types of calculations to perform per request. Options include: IR_METRICS, IDENTITY, FIRST_TRUE, FIRST_FALSE, TRUE_COUNT, FALSE_COUNT, BINARY_PRECISION_TRUE_AS_YES, BINARY_PRECISION_FALSE_AS_YES, STRING_SEQUENCE_VALUE_OCCURRENCE_HISTOGRAM. For a detailed description see the separate section in this documentation. |

| (*) metricNameToAggregationTypeMapping | Assignment of metric name to the applicable aggregation type. Common IR metrics should be assigned to DOUBLE_AVG, and histograms would be of type NESTED_MAP_UNWEIGHTED_SUM_VALUE (just summing up occurrences without using sample weights). If nothing is defined, the default value assigned is DOUBLE_AVG, so do not forget to assign the right types in case this does not apply. You will usually not need any types other than the ones mentioned, but the full range of options is: DOUBLE_AVG, SEQUENCE_KEEP_FIRST, MAP_UNWEIGHTED_SUM_VALUE, MAP_WEIGHTED_SUM_VALUE, NESTED_MAP_UNWEIGHTED_SUM_VALUE, NESTED_MAP_WEIGHTED_SUM_VALUE. |

| (*) taggingConfiguration | Set distinct tagging options. Per tagger you need to select whether to make it extending, that is, whether a separate tag shall be added or the tag shall extend existing ones (see the extend attribute). Allows definition for distinct stages of processing: initTagger (tagging on request template), processedTagger (acting on the response) and resultTagger (acting on the generated response). Right now only two options are implemented, one by request parameter and the other by result length. |

| (*) wrapUpFunction | Allows definition of processing steps to execute after the job is finished (this is part of the job-wrap-up task that is claimed by the nodes). See the section on task definitions for more details. |

| allowedTimePerElementInMillis | (Not used): Timeout for each single element per batch. |

| allowedTimePerBatchInSeconds | (Not used): Timeout for the whole batch (e.g for the sum of time the single elements in a batch take up). |

| allowedTimeForJobInSeconds | (Not used): Timeout for the whole job (that is processing of all batches contained in the job). |

| expectResultsFromBatchCalculations | (Not used): Was used in the akka-based variant to decide whether the central supervisor needs the result (e.g for an overall aggregation) or an empty confirmation is enough (such as when results of batches are stored in central storage anyways). |
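To make the interplay of requestParameters and batchByIndex more tangible, here is a minimal Python sketch. It is not Kolibri's implementation: it only covers parameters that are iterated over independently (the STANDALONE case), and the dictionary layout with `name` and `values` as well as the function names are assumptions made for illustration.

```python
from collections import defaultdict
from itertools import product


def permutations(request_parameters):
    """Yield one dict per combination of the independently iterated parameter values."""
    names = [p["name"] for p in request_parameters]
    value_lists = [p["values"] for p in request_parameters]
    for combo in product(*value_lists):
        yield dict(zip(names, combo))


def batch_by_index(request_parameters, index):
    """Group all combinations by the value of the parameter at the given 0-based index."""
    batch_name = request_parameters[index]["name"]
    batches = defaultdict(list)
    for params in permutations(request_parameters):
        batches[params[batch_name]].append(params)
    return dict(batches)


# Hypothetical parameter list: the query parameter sits at index 0.
request_parameters = [
    {"name": "q", "values": ["shoes", "socks"]},
    {"name": "boost", "values": [0.5, 1.0, 2.0]},
]

batches = batch_by_index(request_parameters, 0)
print(len(batches))           # 2 batches, one per query
print(len(batches["shoes"]))  # 3 requests in the batch for "shoes"
```

Batching by the query parameter yields one batch per query, each containing all combinations of the remaining parameters.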

A fully configured form can look like this:

QUERY_BASED_SEARCH_EVALUATION

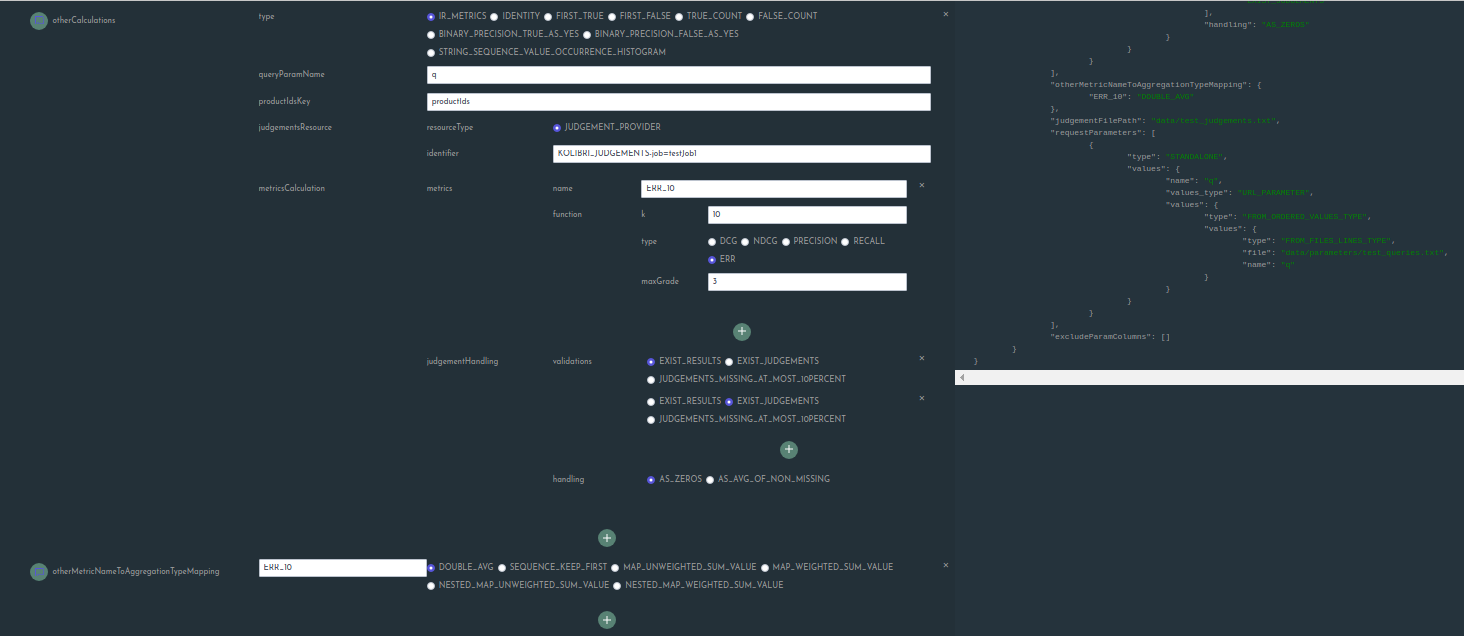

Note that this configuration type contains some pre-defined configurations, including a range of IR metrics (more details below). Because of this, the judgement list is loaded under a predefined key, and you will need to use the same key in the definition of otherCalculations in case you want to add more IR metrics. The key format is KOLIBRI_JUDGEMENTS-job=[jobName], where jobName is the name configured in the jobName field. Further, the setting productIdsKey needs to be set to the value productIds.
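The key format can be expressed as a small helper. This Python sketch assumes that the square brackets in [jobName] are placeholder notation and not part of the actual key; the function name is hypothetical. It also checks the underscore restriction stated for jobName.

```python
def judgement_resource_key(job_name: str) -> str:
    """Build the key under which the judgements for a job are expected to be loaded."""
    if not job_name:
        raise ValueError("jobName must not be empty")
    if "_" in job_name:
        # The documentation lists the underscore as a prohibited character in jobName.
        raise ValueError("jobName must not contain an underscore")
    # Assumption: the brackets in the documented format only mark the placeholder.
    return f"KOLIBRI_JUDGEMENTS-job={job_name}"


print(judgement_resource_key("testJob"))  # KOLIBRI_JUDGEMENTS-job=testJob
```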

| QUERY_BASED_SEARCH_EVALUATION fields | |
|---|---|
| jobName | Simply the name of the job. Prohibited character: underscore. |
| connections | Define one or more target urls by defining the host (without protocol prefix, e.g. not ‘http://search-service’ but ‘search-service’), the port, and whether to use http or https. If multiple connections are defined, load will be distributed among them. |
| fixedParams | Definition of parameter settings that are applied on every request. You can assign multiple values to a single parameter name; all of them will be set in the request. |
| contextPath | The url context path used for requests. |
| queryParameter | The name of the parameter defined under requestParameters that provides the queries. |
| httpMethod | The http method to use, choose between GET, PUT, POST. |
| (*) productIdSelector | The selector to extract the productIds from the response json. See the separate documentation section on selectors. |
| (*) otherSelectors | Allows definition of further extractors to include additional fields. |
| (*) otherCalculations | Define additional calculations besides the pre-defined ones (see below). |
| otherMetricNameToAggregationTypeMapping | Define the aggregation type mappings for the additional metrics you configured in otherCalculations. |
| judgementFilePath | The file path where the judgements reside (relative to the configured base path). |
| (*) requestParameters | Defines the sequence of parameters (STANDALONE or MAPPING) that are permutated to generate the whole range of requests to evaluate. While parameters of type STANDALONE are just iterated over, MAPPINGS provide the option to limit the permutation space by defining a parameter generating key values and one or more mappings that are either mapped to the key value or any other mapped parameter configured before. More details in the separate documentation section. |
| excludeParamColumns | Sometimes it makes sense to exclude certain parameters from the parameters that are used to group results for each row in the calculation result. One use case is to exclude the query parameter if the batching is done by each query to avoid redundant entries. Another case is if we make use of the *_REPLACE parameter types, which are used to replace substrings in other parameters. |
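To illustrate what an aggregation type mapping controls, the following Python sketch mimics two of the aggregation types named in this documentation, DOUBLE_AVG and NESTED_MAP_UNWEIGHTED_SUM_VALUE, and falls back to DOUBLE_AVG for unmapped metrics. It is a simplified illustration, not Kolibri's code: the function names, the metric name `brandHistogram` and the nested-map layout (position, then value, then count) are assumptions.

```python
from collections import defaultdict


def double_avg(values):
    """Plain average over numeric samples."""
    return sum(values) / len(values)


def nested_map_unweighted_sum(histograms):
    """Sum occurrence counts of nested maps key by key, without sample weights."""
    total = defaultdict(lambda: defaultdict(int))
    for hist in histograms:
        for outer_key, inner in hist.items():
            for inner_key, count in inner.items():
                total[outer_key][inner_key] += count
    return {k: dict(v) for k, v in total.items()}


AGGREGATORS = {
    "DOUBLE_AVG": double_avg,
    "NESTED_MAP_UNWEIGHTED_SUM_VALUE": nested_map_unweighted_sum,
}


def aggregate(metric_name, samples, mapping):
    """Pick the aggregation by metric name; unmapped metrics fall back to DOUBLE_AVG."""
    agg_type = mapping.get(metric_name, "DOUBLE_AVG")
    return AGGREGATORS[agg_type](samples)


# Hypothetical mapping for one additional histogram metric.
mapping = {"brandHistogram": "NESTED_MAP_UNWEIGHTED_SUM_VALUE"}
samples = [{"1": {"nike": 1}}, {"1": {"nike": 1, "puma": 2}}]

print(aggregate("NDCG@4", [0.5, 1.0], mapping))      # 0.75
print(aggregate("brandHistogram", samples, mapping))  # {'1': {'nike': 2, 'puma': 2}}
```

The sketch also shows why a missing mapping matters: averaging is only meaningful for numeric metrics, so a histogram metric without an explicit mapping would end up with the wrong aggregation.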

Pre-Defined Settings

Some of the settings are already predefined in this configuration type. Let’s see which.

- productIds are stored under the key productIds.
- the judgement data is loaded in a resource with the key KOLIBRI_JUDGEMENTS-job=[jobName], where [jobName] corresponds to the job name configured.
- batching happens on index 0, thus in case you want to batch on your query parameter, make sure you configure it at first position in the parameter list.
- a bunch of IR metrics are pre-defined, those are:
  - NDCG@2
  - NDCG@4
  - NDCG@8
  - NDCG@12
  - NDCG@24
  - PRECISION@k=2,t=0.2 (t=0.2 means that the threshold to decide between relevant / non-relevant is 0.2)
  - PRECISION@k=4,t=0.2
  - RECALL@k=2,t=0.2
  - RECALL@k=4,t=0.2
- judgement handling strategy is set to EXIST_RESULTS_AND_JUDGEMENTS_MISSING_AS_ZEROS
  - validation is done based on existence of results (if no results exist (0 result hit), the result is marked as failed and recorded as such)
  - in case a product does not have an existing judgement for a query, the judgement value of 0.0 will be used. Note that in the SEARCH_EVALUATION definition format you can select an alternative (such as the average of non-missing judgements, to treat products unknown for the query as being of average quality)
- tagging is only done based on query parameter
- the configured wrapUpFunction (executed after all batches completed) aggregates all generated partial results into an overall aggregation, where each sample gets equal weight (1.0)
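The threshold and the handling of missing judgements listed above can be sketched in Python for the precision case. This is an illustration under stated assumptions, not Kolibri's implementation: a judgement equal to the threshold is counted as relevant, the result is divided by k, and a zero-hit result is signalled by returning None. The function name and the sample data are hypothetical.

```python
def precision_at_k(product_ids, judgements, k, threshold):
    """Fraction of the top-k results whose judgement is at least `threshold`."""
    if not product_ids:
        # Zero-hit result: treated as a failed sample in this sketch.
        return None
    top_k = product_ids[:k]
    # Products without a judgement for the query count as 0.0.
    relevant = sum(1 for pid in top_k if judgements.get(pid, 0.0) >= threshold)
    return relevant / k


# Hypothetical result list and judgements for a single query.
ids = ["p1", "p2", "p3", "p4"]
judgements = {"p1": 1.0, "p3": 0.1}

print(precision_at_k(ids, judgements, k=2, threshold=0.2))  # 0.5
print(precision_at_k(ids, judgements, k=4, threshold=0.2))  # 0.25
print(precision_at_k([], judgements, k=2, threshold=0.2))   # None
```

In the example, p2 and p4 have no judgement and therefore count as 0.0, while p3 has a judgement below the threshold, so only p1 is relevant.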

A completed form could look like this:

REQUESTING_TASK_SEQUENCE

This is the recommended and most flexible way of composing computations.

The fields are:

| REQUESTING_TASK_SEQUENCE fields | |
|---|---|
| jobName | Simply the name of the job. Prohibited character: underscore. |
| (*) resourceDirectives | Resource directives are configurations of resources that shall be loaded centrally on each node, and are only removed once the node no longer has any batch running that relates to a job for which the resources were defined. Larger resources should be loaded this way, such as judgement lists or extensive parameter mappings. The options currently available are: JUDGEMENT_PROVIDER, MAP_STRING_TO_DOUBLE_VALUE, MAP_STRING_TO_STRING_VALUES, STRING_VALUES |
| (*) requestParameters | Defines the sequence of parameters (STANDALONE or MAPPING) that are permutated to generate the whole range of requests to evaluate. While parameters of type STANDALONE are just iterated over, MAPPINGS provide the option to limit the permutation space by defining a parameter generating key values and one or more mappings that are either mapped to the key value or any other mapped parameter configured before. More details in the separate documentation section. |
| batchByIndex | Specifies the 0-based index of the above requestParameters list to define the parameter by which to batch the job (e.g. the query parameter is usually a natural parameter to batch by). |
| (*) taskSequence | List of actual tasks to compute. Tasks defined at index i will have access to data generated in the tasks from index [0, i-1]. For a more detailed description of the options, see the respective section. |
| metricRowResultKey | The key of the generated result under which to find the end result of type MetricRow. For this to be available, a task in the task sequence needs to compute such an object and store it in the result map under the configured key. See the METRIC_CALCULATION task for an example. |
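The data flow between tasks, where a task at index i can read what tasks at indices 0 to i-1 produced, can be sketched in Python as follows. This only illustrates the principle; the task functions, the keys `productIds` and `metricRow`, and the metric `resultCount` are hypothetical stand-ins and do not reflect Kolibri's task API.

```python
from typing import Any, Callable, Dict, List

Task = Callable[[Dict[str, Any]], Dict[str, Any]]


def run_task_sequence(tasks: List[Task], initial: Dict[str, Any]) -> Dict[str, Any]:
    """Run tasks in order; each task sees everything produced by the earlier tasks."""
    results = dict(initial)
    for task in tasks:
        results.update(task(results))
    return results


def request_parse(results: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for a request-and-parse step: pretends the response contained these ids.
    return {"productIds": ["p1", "p2", "p3"]}


def metric_calculation(results: Dict[str, Any]) -> Dict[str, Any]:
    # Reads the output of the earlier task and stores a metric row under a fixed key.
    ids = results["productIds"]
    return {"metricRow": {"resultCount": len(ids)}}


# The configured key must match the key under which a task stored its metric row.
metric_row_result_key = "metricRow"
results = run_task_sequence([request_parse, metric_calculation], {"q": "shoes"})
print(results[metric_row_result_key])  # {'resultCount': 3}
```

If no task writes a value under the configured key, the final lookup fails, which mirrors the requirement that some task in the sequence must store the MetricRow under metricRowResultKey.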

Let’s look at a completed example that provides a sequence of REQUEST_PARSE and METRIC_CALCULATION tasks to request one or many target systems and calculate metrics from the retrieved results: