Actually useful examples Pt2 - Comparing search systems

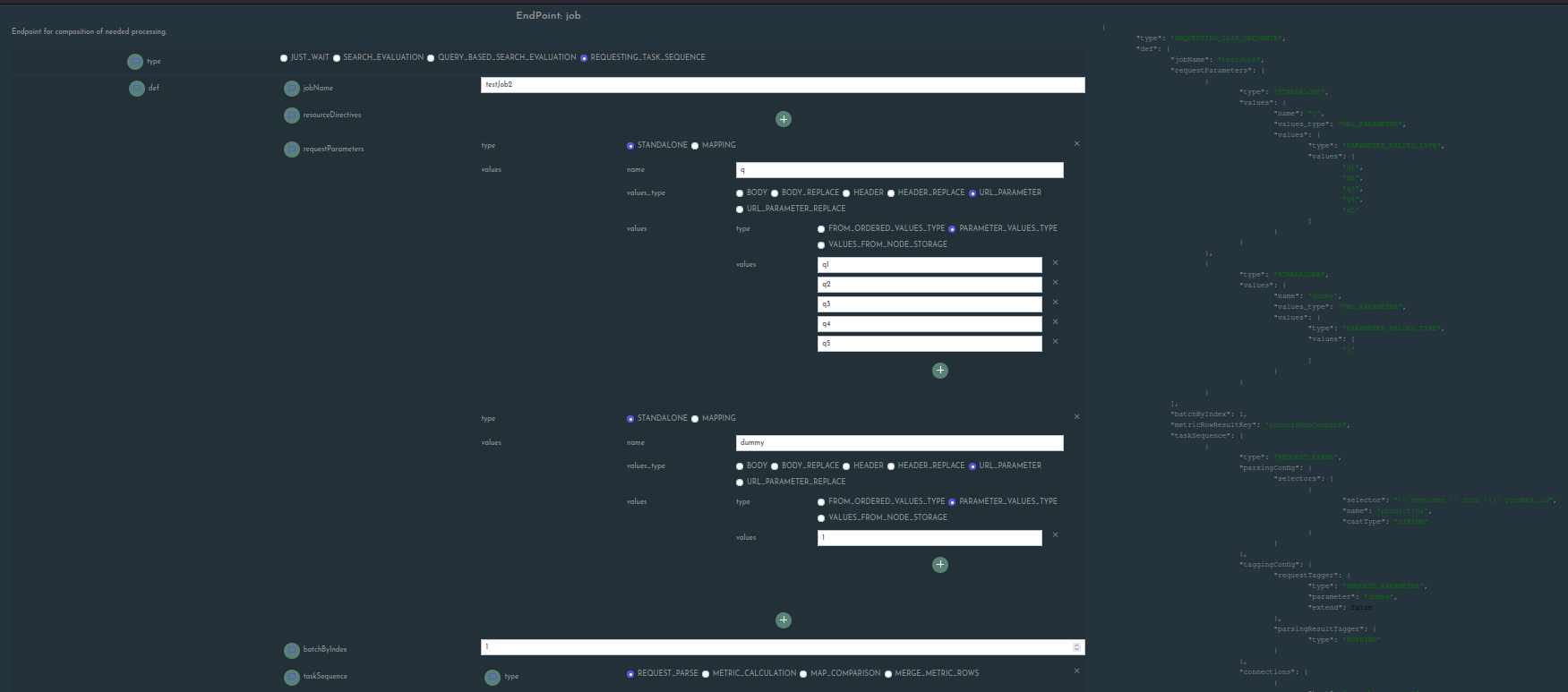

Configuring the Job

We have seen a general job configuration in the previous section. Now we want to focus on the configuration for the task of comparing two or more distinct search systems.

In this example we will use jaccard metrics for this purpose.
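As a reminder of what the metric measures, here is a minimal sketch of Jaccard similarity between two result lists. It assumes each result list is reduced to a set of document ids; the function name and the sample ids are hypothetical, and how the job itself extracts and compares results is defined by its task configuration, not by this snippet.

```python
def jaccard(results_a, results_b):
    """Jaccard similarity of two result lists, treated as sets of document ids."""
    set_a, set_b = set(results_a), set(results_b)
    union = set_a | set_b
    if not union:
        # Assumption: two empty result lists are treated as identical.
        return 1.0
    return len(set_a & set_b) / len(union)


# Hypothetical document ids returned by two search systems for the same query.
system_1 = ["p1", "p2", "p3", "p4"]
system_2 = ["p3", "p4", "p5", "p6"]

# Two shared ids out of six distinct ids overall.
print(round(jaccard(system_1, system_2), 4))
```

A value of 1 means both systems returned the same set of results, and 0 means they share none.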

What we will need to configure here is:

- which parameter settings do we need to form the parameter-permutations that define the http requests

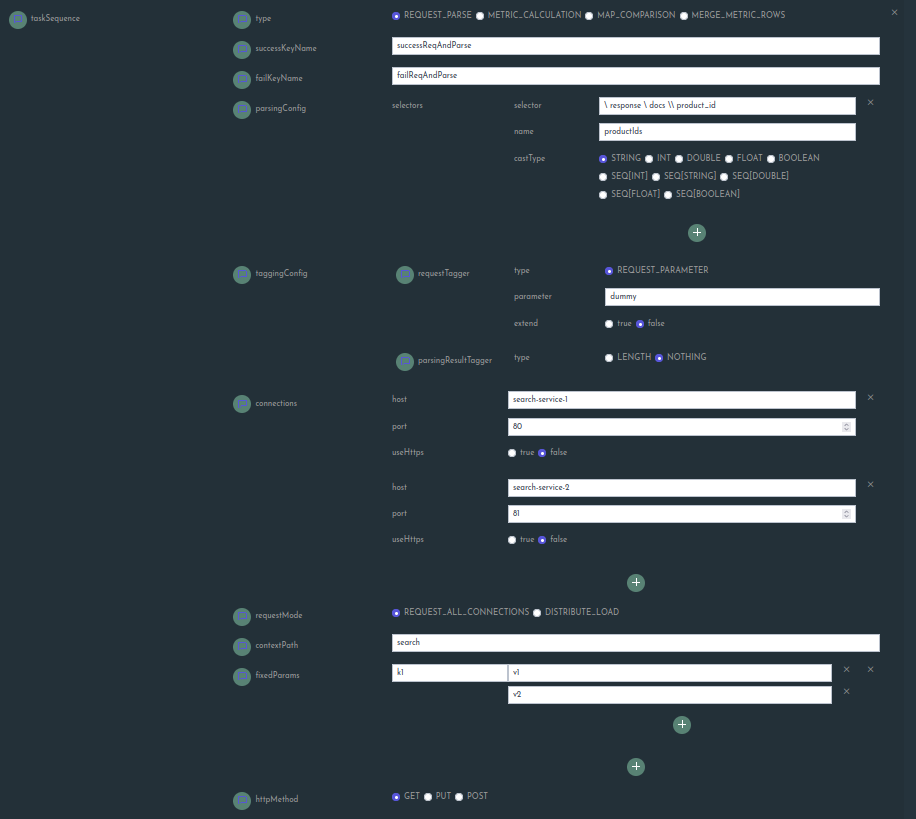

- a

REQUEST_PARSEtask that specifies the search systems of interest within theconnectionssettings and specifies asREQUEST_MODEthe valueREQUEST_ALL_CONNECTIONS. This causes requests be sent to all search systems (as opposed toDISTRIBUTE_LOAD, which balances the load among all defined connections), and stores the results for each system under[successKeyName]-[connectionIndex], where[successKeyName]refers to the value specified in below task definition and[connectionIndex]is the (1-based!) index of the respective connection the request was sent to - a

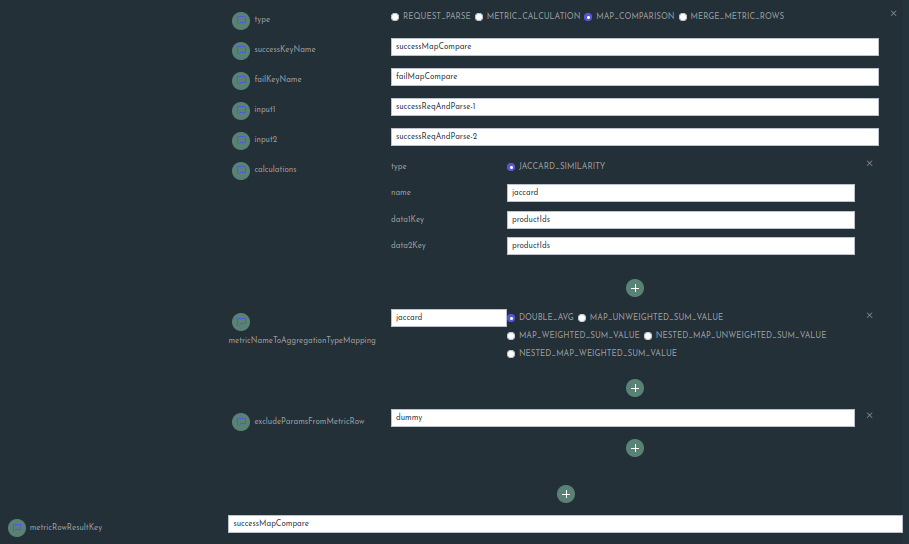

MAP_COMPARISONtask that specifies two input keys referring to the results corresponding to the distinct search system (remember the index-suffix in the previous task definition, thus suffix-1means the first connection specified in the connection-list,-2means the second and so on) - (Optional): we can define multiple

MAP_COMPARISONtasks and compare multiple distinct systems. If we do so, we do not have a single result, but multiple corresponding to the distinct comparisons. In this case we need to use aMERGE_METRIC_ROWStask to fill those values into a single result, whose key can then be referenced in themetricRowResultKeysetting of the job definition. The value for this key is actually what will be written as (partial) result for the defined tag (in the below example we tag by dummy variable since we do only want to have a single file to avoid having one file per query where the content is simply a one-liner, but this is an optional consideration).
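The key naming described above can be sketched as follows. This is only an illustration of the `[successKeyName]-[connectionIndex]` convention with its 1-based index; the helper names and the example key `productIds` are hypothetical and not part of the job definition format.

```python
from itertools import combinations


def result_keys(success_key_name, connection_count):
    """Keys under which per-connection results are stored (suffix is 1-based)."""
    return [f"{success_key_name}-{index}" for index in range(1, connection_count + 1)]


def comparison_pairs(keys):
    """All pairs of result keys, one pair per possible pairwise comparison."""
    return list(combinations(keys, 2))


# Hypothetical setup: three connections, results stored under "productIds".
keys = result_keys("productIds", 3)
pairs = comparison_pairs(keys)

print(keys)   # one key per connection
print(pairs)  # candidate input-key pairs for the comparison tasks
```

With two connections there is a single pair and hence a single comparison; with three or more, each pair would need its own comparison task, which is the situation where the results have to be merged into one.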

Specifying general job settings

Specifying the REQUEST_PARSE task

Specifying the MAP_COMPARISON task

After you submit the job and start it on the STATUS page, results should shortly appear in the folder for the current date within the configured results subfolder. Result files come in two flavors, CSV and JSON. Below are two examples of the resulting format for the job definition above.

CSV

Note that the format contains a few comment lines (starting with `#`). These contain important information for the aggregation of data. In this case the metric `jaccard`, given in the column `value-jaccard`, is of type DOUBLE. The header line starting with `# K_METRIC_AGGREGATOR_MAPPING` therefore specifies that the metric `jaccard` is to be aggregated as an average over values of type double (`DOUBLE_AVG`).

`# K_METRIC_AGGREGATOR_MAPPING jaccard DOUBLE_AVG`

| k1 | q | fail-count-jaccard | weighted-fail-count-jaccard | failReasons-jaccard | success-count-jaccard | weighted-success-count-jaccard | value-jaccard |
|---|---|---|---|---|---|---|---|
| v1&v2 | q1 | 0 | 0.0000 | | 1 | 1.0000 | 0.2667 |
| v1&v2 | q5 | 0 | 0.0000 | | 1 | 1.0000 | 0.4118 |
| v1&v2 | q3 | 0 | 0.0000 | | 1 | 1.0000 | 0.1333 |
| v1&v2 | q4 | 0 | 0.0000 | | 1 | 1.0000 | 0.4286 |
| v1&v2 | q2 | 0 | 0.0000 | | 1 | 1.0000 | 0.3333 |
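Reading such a file could look roughly like the sketch below. It assumes the columns are tab-separated and that the `failReasons-jaccard` field is empty when there are no failures; both are assumptions about the file layout, and the function name is hypothetical. The sample data is copied from the example above.

```python
import csv
import io

# Sample content from above, rebuilt with tab separators (an assumption).
HEADER = ["k1", "q", "fail-count-jaccard", "weighted-fail-count-jaccard",
          "failReasons-jaccard", "success-count-jaccard",
          "weighted-success-count-jaccard", "value-jaccard"]
ROWS = [
    ["v1&v2", "q1", "0", "0.0000", "", "1", "1.0000", "0.2667"],
    ["v1&v2", "q5", "0", "0.0000", "", "1", "1.0000", "0.4118"],
    ["v1&v2", "q3", "0", "0.0000", "", "1", "1.0000", "0.1333"],
    ["v1&v2", "q4", "0", "0.0000", "", "1", "1.0000", "0.4286"],
    ["v1&v2", "q2", "0", "0.0000", "", "1", "1.0000", "0.3333"],
]
SAMPLE = "\n".join(
    ["# K_METRIC_AGGREGATOR_MAPPING jaccard DOUBLE_AVG", "\t".join(HEADER)]
    + ["\t".join(row) for row in ROWS]
)


def parse_result_csv(text, delimiter="\t"):
    """Split comment lines (aggregator mappings) from the tabular data rows."""
    aggregators = {}
    data_lines = []
    for line in text.splitlines():
        if line.startswith("#"):
            parts = line.lstrip("#").split()
            if len(parts) == 3 and parts[0] == "K_METRIC_AGGREGATOR_MAPPING":
                aggregators[parts[1]] = parts[2]  # metric name -> aggregation type
        elif line.strip():
            data_lines.append(line)
    reader = csv.DictReader(io.StringIO("\n".join(data_lines)), delimiter=delimiter)
    return aggregators, list(reader)


aggregators, rows = parse_result_csv(SAMPLE)
values = [float(row["value-jaccard"]) for row in rows]
average = sum(values) / len(values)  # plain average, as DOUBLE_AVG suggests
print(aggregators, round(average, 4))
```

The plain average here ignores the weighted sample columns; since every weight in the example is 1.0, a weighted average would give the same result.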

JSON

    {
      "data": [
        {
          "datasets": [
            {
              "data": [
                0.3333333333333333,
                0.42857142857142855,
                0.4117647058823529,
                0.13333333333333333,
                0.26666666666666666
              ],
              "failReasons": [{}, {}, {}, {}, {}],
              "failSamples": [0, 0, 0, 0, 0],
              "name": "jaccard",
              "successSamples": [1, 1, 1, 1, 1],
              "weightedFailSamples": [0.0, 0.0, 0.0, 0.0, 0.0],
              "weightedSuccessSamples": [1.0, 1.0, 1.0, 1.0, 1.0]
            }
          ],
          "entryType": "DOUBLE_AVG",
          "failCount": 0,
          "labels": [
            {"k1": ["v1", "v2"], "q": ["q2"]},
            {"k1": ["v1", "v2"], "q": ["q4"]},
            {"k1": ["v1", "v2"], "q": ["q5"]},
            {"k1": ["v1", "v2"], "q": ["q3"]},
            {"k1": ["v1", "v2"], "q": ["q1"]}
          ],
          "successCount": 1
        }
      ],
      "name": "(dummy=1)",
      "timestamp": "2023-08-09 09:32:55.034"
    }
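To get from this structure back to one value per query, the entries of `labels` can be paired with the entries of each dataset's `data` array. The sketch below assumes the two lists are aligned by position, which is inferred from the values matching the CSV example (for instance `q2` and 0.3333) rather than stated by the format; the function name is hypothetical and the sample is shortened to two queries.

```python
import json

# Shortened version of the JSON result above (two of the five queries).
SAMPLE = """
{
  "data": [
    {
      "datasets": [
        {"name": "jaccard", "data": [0.3333333333333333, 0.42857142857142855]}
      ],
      "entryType": "DOUBLE_AVG",
      "labels": [
        {"k1": ["v1", "v2"], "q": ["q2"]},
        {"k1": ["v1", "v2"], "q": ["q4"]}
      ]
    }
  ],
  "name": "(dummy=1)"
}
"""


def values_by_query(document, metric_name):
    """Map each query label to the metric value at the same list position."""
    result = {}
    for entry in document["data"]:
        for dataset in entry["datasets"]:
            if dataset["name"] != metric_name:
                continue
            # Assumption: labels[i] describes the request that produced data[i].
            for label, value in zip(entry["labels"], dataset["data"]):
                result[label["q"][0]] = value
    return result


per_query = values_by_query(json.loads(SAMPLE), "jaccard")
print(per_query)
```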

Troubleshoot

If you run into issues (e.g. storing the job definition throws an error, or form content is not displayed correctly after switching between job types), please refer to the troubleshoot section.