Kolibri Documentation

Kolibri - The Execution Engine that loves E-Commerce Search

Kolibri is the German word for hummingbird. I picked it as the project name to reflect the general aim of doing many small things fast, which still describes the batch processing logic quite well.

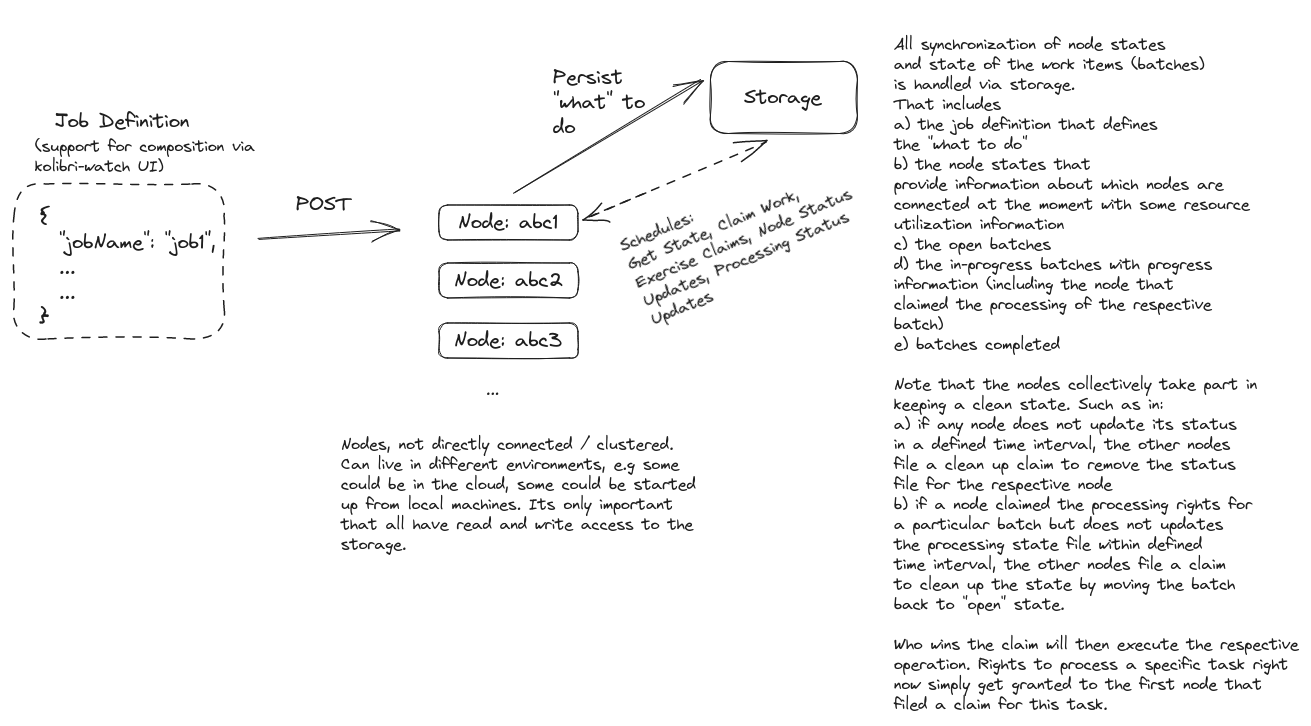

Built in Scala on the ZIO framework, Kolibri provides easy-to-use mechanisms to compose computing tasks, define how to batch them, and perform grouped aggregations. Jobs are represented in such a way that they can easily be distributed among the worker nodes. These nodes do not need to be tightly coupled in a cluster setting; all necessary synchronization happens via the configured storage. The worker nodes also do not need to live in the same environment: whether it is purely local testing on one machine, testing with colleagues on a combination of local machines, cloud deployments, or a mixture thereof, the only thing that matters is that the nodes have read and write access to the storage. The storage can be file-based (local file system, s3, gcs, or any other cloud storage that simulates a file system) or a database such as redis. Right now only local file system and s3 are implemented; more are expected to follow.

Further, while the framework is usable for all kinds of computations, the main focus of its pre-defined jobs is on e-commerce search functionality that eases the evaluation of results. For this, certain tasks are already implemented, such as:

- Flexible definition of permutations, including the possibility to restrict the range of values based on the value of another parameter, restricting grid-search computations to the actually needed set of combinations. Changeable parameters in requests include all aspects, such as url parameters, headers, body.

- Requesting a service via http/https and parsing needed fields out of it, providing easy-to-define syntax for what to parse instead of needing to add any parsing logic yourself.

- Judgement-list based calculation of common information retrieval metrics (such as DCG, NDCG, ERR, Precision, Recall)

- Requesting and comparing distinct search systems in terms of result overlap (jaccard distance).

- Computing the results with attached tags, where partial results are written on the go instead of waiting for the complete result.

- Aggregations of partial results with the option to apply a weight to different results (such as weighting down results belonging to lower-traffic queries)
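The conditional permutations mentioned in the first item can be illustrated with a small Python sketch. It is not Kolibri's Scala API: the function name `conditional_permutations`, the dictionary format, and the parameter names `q`, `sort`, and `boost` are all hypothetical. It only shows how making one parameter's value range depend on another shrinks the grid.

```python
from typing import Callable, Dict, Iterator, List, Union

# A parameter's values are either a fixed list or a function of the values
# already chosen for earlier parameters (hypothetical format, for illustration).
Values = Union[List[str], Callable[[Dict[str, str]], List[str]]]


def conditional_permutations(params: Dict[str, Values]) -> Iterator[Dict[str, str]]:
    """Yield every valid combination, resolving dependent value ranges on the fly."""
    names = list(params)

    def expand(index: int, chosen: Dict[str, str]) -> Iterator[Dict[str, str]]:
        if index == len(names):
            yield dict(chosen)
            return
        name = names[index]
        spec = params[name]
        # Dependent parameters compute their range from the earlier choices.
        values = spec(chosen) if callable(spec) else spec
        for value in values:
            chosen[name] = value
            yield from expand(index + 1, chosen)
        chosen.pop(name, None)

    yield from expand(0, {})


grid = {
    "q": ["shoes", "tv"],
    "sort": ["relevance", "price"],
    # "boost" is only varied when sorting by relevance.
    "boost": lambda chosen: ["1.0", "2.0", "5.0"] if chosen["sort"] == "relevance" else ["1.0"],
}
combinations = list(conditional_permutations(grid))
```

In this example the unrestricted grid would hold 2 x 2 x 3 = 12 requests, while the conditional definition yields 8.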

Why the … should you use this?

You might want to consider Kolibri if you:

- Need a lean mechanism to process many samples but do not want to deploy any additional setup such as queues or databases (using them might become an option at some point, but you won’t need them)

- Need a convenient mechanism to define permutations of parameters, including making values conditional on the value sampled for another parameter, to effectively limit the computation needed for grid-search.

- Want a UI coming with it that gives you control over the tooling

- Want to try it out from local, from many machines in a group of co-workers or in a combination of cloud-deployed machines and local machines. There is no distinction made between where a machine sits, as long as it has access to the resources needed for computation and the configured storage over which state information is synced.

- Are working in search / e-commerce search and do not want to write the Nth framework for evaluation of search system results, such as distributions of result attribute values as in STRING_SEQUENCE_VALUE_OCCURRENCE_HISTOGRAM, comparing results of two search systems with a JACCARD metric, information retrieval metrics such as DCG, NDCG, PRECISION, RECALL, ERR, or general metrics such as IDENTITY (when extracting single attributes, such as numDocs), FIRST_TRUE, FIRST_FALSE, TRUE_COUNT, FALSE_COUNT, BINARY_PRECISION_TRUE_AS_YES, BINARY_PRECISION_FALSE_AS_YES

  - also want convenient support in specifying experiments, by defining the target system, permutations of different types of request modifiers (allowing adjustment of url parameters, headers, body), the fields that need to be parsed from the responses and what to do with them (e.g. which metrics to calculate)

  - also need tagging/grouping of partial results

  - want to aggregate partial results for an overall look at the data and be able to apply distinct weights per result group (such as weighting down the results of less frequent queries)

  - need a nice visualization of results (pending to be activated and extended now that the rewrite is complete)

- Are not working in the above field but need a service that conveniently allows definition of all kinds of processing steps to define workflows.

- … yeah, just use it!
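To make the metric names in the list above more concrete, here is a minimal Python sketch of two of them. It is not taken from Kolibri: it assumes one common DCG formulation (gain divided by log2 of rank + 1, ranks starting at 1) and computes the ideal ranking from all judged documents. Kolibri's exact variants may differ. The function names and the example judgements are made up for illustration.

```python
import math
from typing import Mapping, Sequence, Set


def dcg(gains: Sequence[float]) -> float:
    """Discounted cumulative gain: gain_i / log2(i + 1) for ranks i = 1..n."""
    return sum(gain / math.log2(position + 2) for position, gain in enumerate(gains))


def ndcg(result_ids: Sequence[str], judgements: Mapping[str, float], k: int) -> float:
    """DCG of the top-k results, normalized by the best achievable DCG@k."""
    gains = [judgements.get(doc_id, 0.0) for doc_id in result_ids[:k]]
    ideal_gains = sorted(judgements.values(), reverse=True)[:k]
    ideal = dcg(ideal_gains)
    return dcg(gains) / ideal if ideal > 0 else 0.0


def jaccard_similarity(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def jaccard_distance(a: Set[str], b: Set[str]) -> float:
    return 1.0 - jaccard_similarity(a, b)


# Hypothetical judgement list for one query: product id -> graded relevance.
judgements = {"p1": 3.0, "p2": 1.0, "p3": 0.0}
perfect = ndcg(["p1", "p2", "p3"], judgements, k=3)
reversed_order = ndcg(["p3", "p2", "p1"], judgements, k=3)
overlap = jaccard_similarity({"p1", "p2"}, {"p2", "p3"})
```

Here `perfect` is 1.0 because the results are already in ideal order, `reversed_order` is lower, and `overlap` is 1/3 because the two result sets share one of three distinct products.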

Schematic overview:

A short description of the individual libraries is given in the following.

In the further sub-sections of this documentation you will find step-by-step descriptions of how to use Kolibri.

Kolibri DataTypes

This library contains basic datatypes to simplify common tasks in batch processing and async state keeping.

Kolibri Storage

This library contains the storage implementations, such as file-based local disk and cloud-based storage such as AWS and GCP, and will likely contain non-file-based implementations such as redis at some point.

Kolibri Definitions

Contains the job definitions without the execution mechanism, providing the parts that can be processed by a respective service such as kolibri-fleet-zio.

Kolibri Fleet ZIO

Kolibri Fleet ZIO provides a multi-node batch execution setup.

Batch definitions are flexible and make use of Akka-Streams, allowing the definition of flexible execution flows. Results are aggregated per batch and on demand aggregated to an overall result.

Features include:

- Storage-based task queue, no need for additional deployments of queue system or databases.

- Synchronization of nodes via storage, so it does not matter where nodes are located. They only need access to the selected storage. No need for direct node-to-node connections / tight clustering. This means you can spin up your own local machine, several local machines in your office, or multiple nodes in the cloud, or run all of them at the same time. If a job should not be computed using the resources of all connected nodes, modify the stored directive, which indicates for example “processing for all”, “only process on node X”, or “stop processing”.

- Easy definition of datasets / permutations / tags / groups

- Mechanisms to split those sets into smaller batches

- Storage-based negotiation logic of single nodes to claim rights to process batches / single actions (such as cleaning up after a node that went offline) including state handling and collection of partial results
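The idea of nodes claiming batches through shared storage can be sketched as follows. This is not Kolibri's negotiation protocol, which is not described here. It is a minimal Python illustration that assumes a local file system, where creating a file with `O_CREAT | O_EXCL` fails if the file already exists; object stores such as s3 may need a different mechanism. The function `try_claim`, the `claims` directory, and the node and batch names are hypothetical.

```python
import os
import tempfile
from pathlib import Path


def try_claim(claims_dir: Path, batch_id: str, node_id: str) -> bool:
    """Return True if this node managed to create the claim file first."""
    claims_dir.mkdir(parents=True, exist_ok=True)
    claim_file = claims_dir / f"{batch_id}.claim"
    try:
        # O_EXCL makes creation fail if another node already wrote the claim.
        fd = os.open(claim_file, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    with os.fdopen(fd, "w") as handle:
        handle.write(node_id)
    return True


with tempfile.TemporaryDirectory() as tmp:
    claims = Path(tmp) / "claims"
    first = try_claim(claims, "batch-0", "node-a")   # node-a wins the claim
    second = try_claim(claims, "batch-0", "node-b")  # node-b sees the existing claim
    owner = (claims / "batch-0.claim").read_text()
```

A real setup would additionally need to handle stale claims, for example cleaning up after a node that went offline, which this sketch leaves out.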

Use case job definitions include:

- Search parameter grid evaluation with flexible tagging based on the request (e.g. by request parameter), the result (e.g. size of the result set or other characteristics of the search response), or the derived metrics (given by a MetricRow object). Tagging allows separation into distinct aggregations based on the concept a tag represents.
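How tags separate results into distinct aggregations, and how weights enter, can be sketched in a few lines of Python. This is an illustration only and not Kolibri's MetricRow implementation; the `PartialResult` class, the tag strings, and the numbers are invented for the example. It computes a weighted mean of one metric per tag.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class PartialResult:
    tag: str       # e.g. derived from a request parameter
    metric: float  # e.g. NDCG for a single query
    weight: float  # e.g. proportional to query traffic


def aggregate_by_tag(results: Iterable[PartialResult]) -> Dict[str, float]:
    """Weighted mean of the metric, computed separately for every tag."""
    weighted_sums: Dict[str, float] = defaultdict(float)
    total_weights: Dict[str, float] = defaultdict(float)
    for result in results:
        weighted_sums[result.tag] += result.metric * result.weight
        total_weights[result.tag] += result.weight
    return {
        tag: weighted_sums[tag] / total_weights[tag]
        for tag in weighted_sums
        if total_weights[tag] > 0
    }


partials = [
    PartialResult(tag="sort=relevance", metric=0.8, weight=3.0),  # high-traffic query
    PartialResult(tag="sort=relevance", metric=0.4, weight=1.0),  # low-traffic query
    PartialResult(tag="sort=price", metric=0.5, weight=2.0),
]
aggregated = aggregate_by_tag(partials)
```

With these inputs the `sort=relevance` group averages to (0.8 * 3 + 0.4 * 1) / 4 = 0.7, so the low-traffic query pulls the result down less than it would in an unweighted mean of 0.6.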

Kolibri-Fleet-ZIO on DockerHub

Kolibri Watch (UI)

Kolibri Watch provides a UI for the Kolibri project, allowing monitoring of job execution progress, definition of the executions and submission to the Kolibri backend for execution.

Response Juggler

Response Juggler is only listed here as a mock service for trying out Kolibri. You will find an example configuration in the docker-compose file in the root of the kolibri project. It is used there to mimic a search service by sampling results that are then processed according to the job definition posted via kolibri-fleet-zio. This way you can try out, directly out of the box, whether handling of a specific response format is correct and/or execute benchmarks.

It allows definition of a main template that defines how a response is supposed to look and definitions of the placeholders (enclosed in {{ and }}), for example:

    {
      "response": {
        "docs": {{DOCS}},
        "numFound": {{NUM_FOUND}}
      }
    }

The placeholders need to have a sampling mechanism configured. For example, {{DOCS}} could be configured to map to a partial file that defines the following json format:

    {
      "product_id": {{PID}},
      "bool": {{BOOL}},
      "string_val_1": {{STRING_VAL1}}
    }

Since this last json snippet is already at the leaf level and no placeholder comes below it, we can configure specific values for its placeholders instead of other json values with further placeholders. A specific value sampling definition can look similar to this one (the names on the left are environment variables, the right side is the value set for each variable):

    RESPONSE_FIELD_IDENT_STRING_VAL1: "{{STRING_VAL1}}"
    RESPONSE_FIELD_SAMPLER_TYPE_STRING_VAL1: "SINGLE"
    RESPONSE_FIELD_SAMPLER_ELEMENT_CAST_STRING_VAL1: "STRING"
    RESPONSE_FIELD_SAMPLER_SELECTION_STRING_VAL1: "p0,p1,p2,p3,p4,p5,p6,p7,p8,p9,p10,p11,p12,p13,p14,p15,p16,p17,p18,p19"

The above specifies that the placeholder {{STRING_VAL1}} corresponds to a single (SINGLE) value, which is of type string (STRING) and is sampled from the comma-separated selection p0,p1,p2,p3,p4,p5,p6,p7,p8,p9,p10,p11,p12,p13,p14,p15,p16,p17,p18,p19.
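The sampling behaviour described above can be mimicked in a short Python sketch. It is not Response Juggler's implementation: the functions `sample_field` and `render` are hypothetical, only the SINGLE sampler type and the STRING cast from the example are covered, and the selection is shortened to four values. The variable names follow the RESPONSE_FIELD_* pattern shown above.

```python
import json
import random
from typing import Dict

# Settings mirroring the environment variables shown above (selection shortened).
settings = {
    "RESPONSE_FIELD_IDENT_STRING_VAL1": "{{STRING_VAL1}}",
    "RESPONSE_FIELD_SAMPLER_TYPE_STRING_VAL1": "SINGLE",
    "RESPONSE_FIELD_SAMPLER_ELEMENT_CAST_STRING_VAL1": "STRING",
    "RESPONSE_FIELD_SAMPLER_SELECTION_STRING_VAL1": "p0,p1,p2,p3",
}


def sample_field(name: str, config: Dict[str, str], rng: random.Random) -> str:
    """Draw one value for a field; only SINGLE / STRING is handled in this sketch."""
    sampler_type = config[f"RESPONSE_FIELD_SAMPLER_TYPE_{name}"]
    cast = config[f"RESPONSE_FIELD_SAMPLER_ELEMENT_CAST_{name}"]
    if sampler_type != "SINGLE" or cast != "STRING":
        raise ValueError(f"not covered by this sketch: {sampler_type}/{cast}")
    choices = config[f"RESPONSE_FIELD_SAMPLER_SELECTION_{name}"].split(",")
    return str(rng.choice(choices))


def render(template: str, name: str, config: Dict[str, str], rng: random.Random) -> str:
    """Replace the field's placeholder with a sampled, json-encoded value."""
    placeholder = config[f"RESPONSE_FIELD_IDENT_{name}"]
    value = sample_field(name, config, rng)
    return template.replace(placeholder, json.dumps(value))


template = '{"string_val_1": {{STRING_VAL1}}}'
rendered = json.loads(render(template, "STRING_VAL1", settings, random.Random(0)))
```

After rendering, `rendered["string_val_1"]` holds one of the configured values, and the output is valid json because the sampled string is json-encoded before substitution.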

For further information on usage, refer to the project page referenced below.